CT Images using Constrast Classification,CNN

MRI / CT 를 찍게 되면 조영제(Contrast)를 사용하게 되는데 사용한 그림인지 아닌지 구분하는 코드.

CT(Iamages using Contrast Classification CNN with Keras(tensorflow))

- CT images

- Total images : 100

- Training Dataset: 90

- Test Dataset: 10

import numpy as np # matrix tools

import matplotlib.pyplot as plt # for basic plots

import seaborn as sns # for nicer plots

import pandas as pd

from glob import glob

import re

from skimage.io import imread

import os

import keras

Using TensorFlow backend.

%matplotlib inline

Exploratory data analysis

overview = pd.read_csv('data/overview.csv')

overview.head()

| Age | Contrast | ContrastTag | raw_input_path | id | tiff_name | dicom_name | |

|---|---|---|---|---|---|---|---|

| 0 | 60 | True | NONE | ../data/50_50_dicom_cases\Contrast\00001 (1).dcm | 0 | ID_0000_AGE_0060_CONTRAST_1_CT.tif | ID_0000_AGE_0060_CONTRAST_1_CT.dcm |

| 1 | 69 | True | NONE | ../data/50_50_dicom_cases\Contrast\00001 (10).dcm | 1 | ID_0001_AGE_0069_CONTRAST_1_CT.tif | ID_0001_AGE_0069_CONTRAST_1_CT.dcm |

| 2 | 74 | True | APPLIED | ../data/50_50_dicom_cases\Contrast\00001 (11).dcm | 2 | ID_0002_AGE_0074_CONTRAST_1_CT.tif | ID_0002_AGE_0074_CONTRAST_1_CT.dcm |

| 3 | 75 | True | NONE | ../data/50_50_dicom_cases\Contrast\00001 (12).dcm | 3 | ID_0003_AGE_0075_CONTRAST_1_CT.tif | ID_0003_AGE_0075_CONTRAST_1_CT.dcm |

| 4 | 56 | True | NONE | ../data/50_50_dicom_cases\Contrast\00001 (13).dcm | 4 | ID_0004_AGE_0056_CONTRAST_1_CT.tif | ID_0004_AGE_0056_CONTRAST_1_CT.dcm |

len(overview)

100

overview['Contrast'] = overview['Contrast'].map(lambda x: 1 if x else 0)

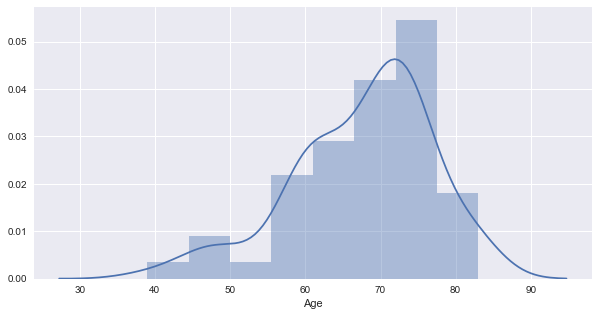

plt.figure(figsize=(10,5))

sns.distplot(overview['Age'])

<matplotlib.axes._subplots.AxesSubplot at 0x17aac249f28>

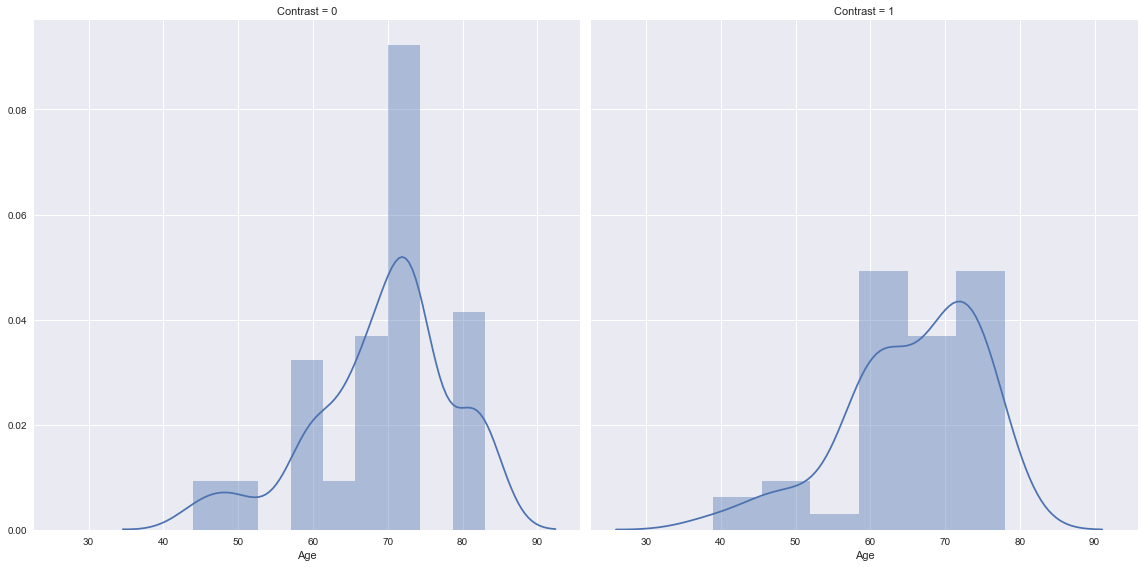

g = sns.FacetGrid(overview, col="Contrast", size=8)

g = g.map(sns.distplot, "Age")

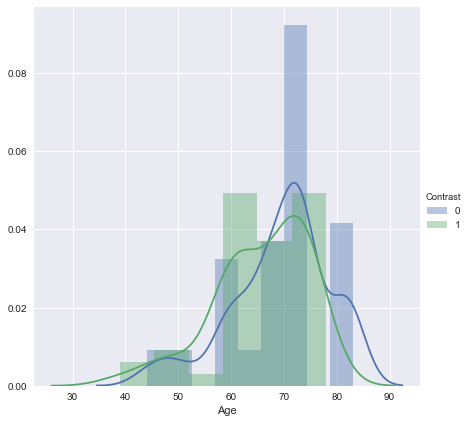

g = sns.FacetGrid(overview, hue="Contrast",size=6, legend_out=True)

g = g.map(sns.distplot, "Age").add_legend()

Read Image Files

BASE_IMAGE_PATH = os.path.join("data","tiff_images")

all_images_list = glob(os.path.join(BASE_IMAGE_PATH,"*.tif"))

all_images_list[:5]

['data\\tiff_images\\ID_0000_AGE_0060_CONTRAST_1_CT.tif',

'data\\tiff_images\\ID_0001_AGE_0069_CONTRAST_1_CT.tif',

'data\\tiff_images\\ID_0002_AGE_0074_CONTRAST_1_CT.tif',

'data\\tiff_images\\ID_0003_AGE_0075_CONTRAST_1_CT.tif',

'data\\tiff_images\\ID_0004_AGE_0056_CONTRAST_1_CT.tif']

- np.expand_dims -> Expand the shape of an array.

- Insert a new axis, corresponding to a given position in the array shape.

imread(all_images_list[0]).shape

(512, 512)

np.array(np.arange(81)).reshape(9,9)

array([[ 0, 1, 2, 3, 4, 5, 6, 7, 8],

[ 9, 10, 11, 12, 13, 14, 15, 16, 17],

[18, 19, 20, 21, 22, 23, 24, 25, 26],

[27, 28, 29, 30, 31, 32, 33, 34, 35],

[36, 37, 38, 39, 40, 41, 42, 43, 44],

[45, 46, 47, 48, 49, 50, 51, 52, 53],

[54, 55, 56, 57, 58, 59, 60, 61, 62],

[63, 64, 65, 66, 67, 68, 69, 70, 71],

[72, 73, 74, 75, 76, 77, 78, 79, 80]])

np.array(np.arange(81)).reshape(9,9)[::3,::3]

array([[ 0, 3, 6],

[27, 30, 33],

[54, 57, 60]])

np.expand_dims(imread(all_images_list[0])[::4,::4],0).shape

(1, 128, 128)

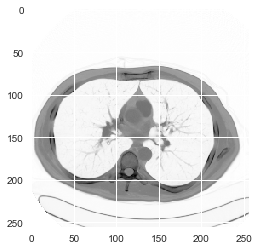

jimread = lambda x: np.expand_dims(imread(x)[::2,::2],0)

test_image = jimread(all_images_list[0])

plt.imshow(test_image[0])

<matplotlib.image.AxesImage at 0x17aacdb8978>

check_contrast = re.compile(r'data\\tiff_images\\ID_([\d]+)_AGE_[\d]+_CONTRAST_([\d]+)_CT.tif')

label = []

id_list = []

for image in all_images_list:

id_list.append(check_contrast.findall(image)[0][0])

label.append(check_contrast.findall(image)[0][1])

label_list = pd.DataFrame(label,id_list)

label_list.head()

| 0 | |

|---|---|

| 0000 | 1 |

| 0001 | 1 |

| 0002 | 1 |

| 0003 | 1 |

| 0004 | 1 |

images = np.stack([jimread(i) for i in all_images_list],0)

len(images)

100

Split Data to Train, Test

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(images, label_list, test_size=0.1, random_state=0)

Reshape image Data

n_train, depth, width, height = X_train.shape

n_test,_,_,_ = X_test.shape

n_train,depth, width, height

(90, 1, 256, 256)

input_shape = (width,height,depth)

input_shape

(256, 256, 1)

input_train = X_train.reshape((n_train, width,height,depth))

input_train.shape

input_train.astype('float32')

input_train = input_train / np.max(input_train)

input_train.max()

1.0

input_test = X_test.reshape(n_test, *input_shape)

input_test.astype('float32')

input_test = input_test / np.max(input_test)

output_train = keras.utils.to_categorical(y_train, 2)

output_test = keras.utils.to_categorical(y_test, 2)

output_train[5]

array([ 0., 1.])

input_train.shape

(90, 256, 256, 1)

Network

from keras.models import Sequential

from keras.layers import Dense, Flatten

from keras.optimizers import Adam

from keras.layers import Conv2D, MaxPooling2D

model = Sequential()

model.add(Conv2D(32, (4, 4), activation='relu', input_shape=input_shape))

# 32개의 4x4 Filter 를 이용하여 Convolutional Network생성

model.add(MaxPooling2D(pool_size=(2, 2))) # 2x2 Maxpooling

model.add(Flatten()) # 쭉풀어서 Fully Connected Neural Network를 만든다.

model.add(Dense(2, activation='softmax'))

model.summary()

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_5 (Conv2D) (None, 253, 253, 32) 544

_________________________________________________________________

max_pooling2d_5 (MaxPooling2 (None, 126, 126, 32) 0

_________________________________________________________________

flatten_4 (Flatten) (None, 508032) 0

_________________________________________________________________

dense_4 (Dense) (None, 2) 1016066

=================================================================

Total params: 1,016,610

Trainable params: 1,016,610

Non-trainable params: 0

_________________________________________________________________

model.compile(loss='categorical_crossentropy',

optimizer=Adam(),

metrics=['accuracy'])

batch_size = 20

epochs = 40

history = model.fit(input_train, output_train,

batch_size=batch_size,

epochs=epochs,

verbose=1,

validation_data=(input_test, output_test))

Train on 90 samples, validate on 10 samples

Epoch 1/40

90/90 [==============================] - 1s - loss: 3.5470 - acc: 0.4667 - val_loss: 0.7117 - val_acc: 0.5000

Epoch 2/40

90/90 [==============================] - 0s - loss: 0.8217 - acc: 0.7444 - val_loss: 2.1616 - val_acc: 0.6000

Epoch 3/40

90/90 [==============================] - 0s - loss: 0.5779 - acc: 0.7889 - val_loss: 0.4329 - val_acc: 0.7000

Epoch 4/40

90/90 [==============================] - 0s - loss: 0.4233 - acc: 0.8000 - val_loss: 0.7214 - val_acc: 0.7000

Epoch 5/40

90/90 [==============================] - 0s - loss: 0.2544 - acc: 0.9000 - val_loss: 0.7363 - val_acc: 0.7000

Epoch 6/40

90/90 [==============================] - 0s - loss: 0.0576 - acc: 1.0000 - val_loss: 0.2655 - val_acc: 0.9000

Epoch 7/40

90/90 [==============================] - 0s - loss: 0.0904 - acc: 0.9667 - val_loss: 0.2782 - val_acc: 0.9000

Epoch 8/40

90/90 [==============================] - 0s - loss: 0.0265 - acc: 1.0000 - val_loss: 0.5291 - val_acc: 0.8000

Epoch 9/40

90/90 [==============================] - 0s - loss: 0.0323 - acc: 1.0000 - val_loss: 0.6118 - val_acc: 0.8000

Epoch 10/40

90/90 [==============================] - 0s - loss: 0.0242 - acc: 1.0000 - val_loss: 0.4871 - val_acc: 0.8000

Epoch 11/40

90/90 [==============================] - 0s - loss: 0.0131 - acc: 1.0000 - val_loss: 0.3767 - val_acc: 0.8000

Epoch 12/40

90/90 [==============================] - 0s - loss: 0.0112 - acc: 1.0000 - val_loss: 0.3334 - val_acc: 0.9000

Epoch 13/40

90/90 [==============================] - 0s - loss: 0.0103 - acc: 1.0000 - val_loss: 0.3521 - val_acc: 0.9000

Epoch 14/40

90/90 [==============================] - 0s - loss: 0.0083 - acc: 1.0000 - val_loss: 0.3828 - val_acc: 0.8000

Epoch 15/40

90/90 [==============================] - 0s - loss: 0.0069 - acc: 1.0000 - val_loss: 0.4158 - val_acc: 0.8000

Epoch 16/40

90/90 [==============================] - 0s - loss: 0.0060 - acc: 1.0000 - val_loss: 0.4394 - val_acc: 0.8000

Epoch 17/40

90/90 [==============================] - 0s - loss: 0.0055 - acc: 1.0000 - val_loss: 0.4422 - val_acc: 0.8000

Epoch 18/40

90/90 [==============================] - 0s - loss: 0.0050 - acc: 1.0000 - val_loss: 0.4348 - val_acc: 0.8000

Epoch 19/40

90/90 [==============================] - 0s - loss: 0.0046 - acc: 1.0000 - val_loss: 0.4296 - val_acc: 0.8000

Epoch 20/40

90/90 [==============================] - 0s - loss: 0.0043 - acc: 1.0000 - val_loss: 0.4386 - val_acc: 0.8000

Epoch 21/40

90/90 [==============================] - 0s - loss: 0.0040 - acc: 1.0000 - val_loss: 0.4428 - val_acc: 0.8000

Epoch 22/40

90/90 [==============================] - 0s - loss: 0.0037 - acc: 1.0000 - val_loss: 0.4448 - val_acc: 0.8000

Epoch 23/40

90/90 [==============================] - 0s - loss: 0.0035 - acc: 1.0000 - val_loss: 0.4392 - val_acc: 0.8000

Epoch 24/40

90/90 [==============================] - 0s - loss: 0.0032 - acc: 1.0000 - val_loss: 0.4443 - val_acc: 0.8000

Epoch 25/40

90/90 [==============================] - 0s - loss: 0.0030 - acc: 1.0000 - val_loss: 0.4493 - val_acc: 0.8000

Epoch 26/40

90/90 [==============================] - 0s - loss: 0.0029 - acc: 1.0000 - val_loss: 0.4531 - val_acc: 0.8000

Epoch 27/40

90/90 [==============================] - 0s - loss: 0.0027 - acc: 1.0000 - val_loss: 0.4548 - val_acc: 0.8000

Epoch 28/40

90/90 [==============================] - 0s - loss: 0.0025 - acc: 1.0000 - val_loss: 0.4542 - val_acc: 0.8000

Epoch 29/40

90/90 [==============================] - 0s - loss: 0.0024 - acc: 1.0000 - val_loss: 0.4493 - val_acc: 0.8000

Epoch 30/40

90/90 [==============================] - 0s - loss: 0.0023 - acc: 1.0000 - val_loss: 0.4466 - val_acc: 0.8000

Epoch 31/40

90/90 [==============================] - 0s - loss: 0.0022 - acc: 1.0000 - val_loss: 0.4540 - val_acc: 0.8000

Epoch 32/40

90/90 [==============================] - 0s - loss: 0.0021 - acc: 1.0000 - val_loss: 0.4581 - val_acc: 0.8000

Epoch 33/40

90/90 [==============================] - 0s - loss: 0.0020 - acc: 1.0000 - val_loss: 0.4665 - val_acc: 0.8000

Epoch 34/40

90/90 [==============================] - 0s - loss: 0.0019 - acc: 1.0000 - val_loss: 0.4746 - val_acc: 0.8000

Epoch 35/40

90/90 [==============================] - 0s - loss: 0.0018 - acc: 1.0000 - val_loss: 0.4781 - val_acc: 0.8000

Epoch 36/40

90/90 [==============================] - 0s - loss: 0.0017 - acc: 1.0000 - val_loss: 0.4788 - val_acc: 0.8000

Epoch 37/40

90/90 [==============================] - 0s - loss: 0.0016 - acc: 1.0000 - val_loss: 0.4752 - val_acc: 0.8000

Epoch 38/40

90/90 [==============================] - 0s - loss: 0.0016 - acc: 1.0000 - val_loss: 0.4780 - val_acc: 0.8000

Epoch 39/40

90/90 [==============================] - 0s - loss: 0.0015 - acc: 1.0000 - val_loss: 0.4765 - val_acc: 0.8000

Epoch 40/40

90/90 [==============================] - 0s - loss: 0.0014 - acc: 1.0000 - val_loss: 0.4762 - val_acc: 0.8000

history.history

{'acc': [0.46666666865348816,

0.74444445636537337,

0.7888888981607225,

0.8000000052981906,

0.9000000026490953,

1.0,

0.96666665871938073,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0,

1.0],

'loss': [3.5469898117913141,

0.82166123059060836,

0.57785466478930581,

0.42333625257015228,

0.25443292326397365,

0.057624416632784739,

0.090415256718794509,

0.026549512313471899,

0.032302634583579168,

0.024231223803427484,

0.013135068325532807,

0.011229113986094793,

0.010349845927622583,

0.008280523535278108,

0.0068697543659557896,

0.0060073141939938068,

0.0054577676993277334,

0.0050347024031604333,

0.0046245976765122675,

0.0042539972087575328,

0.0039954457121590776,

0.003706983601053556,

0.0034763599849409526,

0.0032460537428657212,

0.0030377271792127025,

0.0028651561846749652,

0.0027027277586360774,

0.0025377526568869748,

0.0024104245369219119,

0.0023004989036255414,

0.0021699113098697532,

0.0020625931210815907,

0.0019607565417471859,

0.0018652590612570445,

0.0017944105202332139,

0.0017089455440226528,

0.0016356468743955095,

0.0015679198097334141,

0.0015029058429516023,

0.0014405033240715663],

'val_acc': [0.5,

0.60000002384185791,

0.70000004768371582,

0.70000004768371582,

0.70000004768371582,

0.89999997615814209,

0.89999997615814209,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.89999997615814209,

0.89999997615814209,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896,

0.80000001192092896],

'val_loss': [0.71169030666351318,

2.1615970134735107,

0.4328601062297821,

0.72143769264221191,

0.736328125,

0.26548981666564941,

0.27816122770309448,

0.529060959815979,

0.61176717281341553,

0.4870985746383667,

0.37665331363677979,

0.33338609337806702,

0.35212856531143188,

0.38279733061790466,

0.41582024097442627,

0.43944761157035828,

0.44218528270721436,

0.43482279777526855,

0.42955672740936279,

0.43860852718353271,

0.44281277060508728,

0.44484323263168335,

0.43915966153144836,

0.44429975748062134,

0.44934400916099548,

0.45312052965164185,

0.45475301146507263,

0.45418411493301392,

0.4492676854133606,

0.44664955139160156,

0.4540124237537384,

0.45808041095733643,

0.46652591228485107,

0.47463798522949219,

0.47812485694885254,

0.47876393795013428,

0.47516763210296631,

0.4779818058013916,

0.47649335861206055,

0.47622102499008179]}

score = model.evaluate(input_test, output_test, verbose=0)

score

[0.47622102499008179, 0.80000001192092896]

Model 2

model2 = Sequential()

model2.add(Conv2D(50, (5, 5), activation='relu', input_shape=input_shape))

# 32개의 4x4 Filter 를 이용하여 Convolutional Network생성

model2.add(MaxPooling2D(pool_size=(3, 3))) # 3x3 Maxpooling

model2.add(Conv2D(30, (4, 4), activation='relu', input_shape=input_shape))

model2.add(MaxPooling2D(pool_size=(2, 2))) # 2x2 Maxpooling

model2.add(Flatten()) # 쭉풀어서 Fully Connected Neural Network를 만든다.

model2.add(Dense(2, activation='softmax'))

model2.summary()

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_8 (Conv2D) (None, 252, 252, 50) 1300

_________________________________________________________________

max_pooling2d_8 (MaxPooling2 (None, 84, 84, 50) 0

_________________________________________________________________

conv2d_9 (Conv2D) (None, 81, 81, 30) 24030

_________________________________________________________________

max_pooling2d_9 (MaxPooling2 (None, 40, 40, 30) 0

_________________________________________________________________

flatten_6 (Flatten) (None, 48000) 0

_________________________________________________________________

dense_6 (Dense) (None, 2) 96002

=================================================================

Total params: 121,332

Trainable params: 121,332

Non-trainable params: 0

_________________________________________________________________

model2.compile(loss='categorical_crossentropy',

optimizer=Adam(),

metrics=['accuracy'])

history = model2.fit(input_train, output_train,

batch_size=batch_size,

epochs=epochs,

verbose=1,

validation_data=(input_test, output_test))

Train on 90 samples, validate on 10 samples

Epoch 1/40

90/90 [==============================] - 1s - loss: 0.7125 - acc: 0.4667 - val_loss: 0.6814 - val_acc: 0.7000

Epoch 2/40

90/90 [==============================] - 0s - loss: 0.6080 - acc: 0.8556 - val_loss: 0.6586 - val_acc: 0.7000

Epoch 3/40

90/90 [==============================] - 0s - loss: 0.4500 - acc: 0.8667 - val_loss: 0.5858 - val_acc: 0.7000

Epoch 4/40

90/90 [==============================] - 0s - loss: 0.3791 - acc: 0.8333 - val_loss: 0.2891 - val_acc: 0.9000

Epoch 5/40

90/90 [==============================] - 0s - loss: 0.2775 - acc: 0.9000 - val_loss: 0.3965 - val_acc: 0.8000

Epoch 6/40

90/90 [==============================] - 0s - loss: 0.1812 - acc: 0.9556 - val_loss: 0.2022 - val_acc: 0.9000

Epoch 7/40

90/90 [==============================] - 0s - loss: 0.1389 - acc: 0.9667 - val_loss: 0.2486 - val_acc: 0.8000

Epoch 8/40

90/90 [==============================] - 0s - loss: 0.0684 - acc: 0.9778 - val_loss: 0.1031 - val_acc: 1.0000

Epoch 9/40

90/90 [==============================] - 0s - loss: 0.0485 - acc: 0.9778 - val_loss: 0.1829 - val_acc: 0.8000

Epoch 10/40

90/90 [==============================] - 0s - loss: 0.0401 - acc: 1.0000 - val_loss: 0.1561 - val_acc: 0.9000

Epoch 11/40

90/90 [==============================] - 0s - loss: 0.0177 - acc: 1.0000 - val_loss: 0.0454 - val_acc: 1.0000

Epoch 12/40

90/90 [==============================] - 0s - loss: 0.0187 - acc: 1.0000 - val_loss: 0.1317 - val_acc: 0.9000

Epoch 13/40

90/90 [==============================] - 0s - loss: 0.0100 - acc: 1.0000 - val_loss: 0.1339 - val_acc: 0.9000

Epoch 14/40

90/90 [==============================] - 0s - loss: 0.0058 - acc: 1.0000 - val_loss: 0.0540 - val_acc: 1.0000

Epoch 15/40

90/90 [==============================] - 0s - loss: 0.0045 - acc: 1.0000 - val_loss: 0.0564 - val_acc: 1.0000

Epoch 16/40

90/90 [==============================] - 0s - loss: 0.0028 - acc: 1.0000 - val_loss: 0.0769 - val_acc: 1.0000

Epoch 17/40

90/90 [==============================] - 0s - loss: 0.0024 - acc: 1.0000 - val_loss: 0.0755 - val_acc: 1.0000

Epoch 18/40

90/90 [==============================] - 0s - loss: 0.0020 - acc: 1.0000 - val_loss: 0.0629 - val_acc: 1.0000

Epoch 19/40

90/90 [==============================] - 0s - loss: 0.0016 - acc: 1.0000 - val_loss: 0.0488 - val_acc: 1.0000

Epoch 20/40

90/90 [==============================] - 0s - loss: 0.0014 - acc: 1.0000 - val_loss: 0.0434 - val_acc: 1.0000

Epoch 21/40

90/90 [==============================] - 0s - loss: 0.0013 - acc: 1.0000 - val_loss: 0.0466 - val_acc: 1.0000

Epoch 22/40

90/90 [==============================] - 0s - loss: 0.0011 - acc: 1.0000 - val_loss: 0.0457 - val_acc: 1.0000

Epoch 23/40

90/90 [==============================] - 0s - loss: 0.0010 - acc: 1.0000 - val_loss: 0.0441 - val_acc: 1.0000

Epoch 24/40

90/90 [==============================] - 0s - loss: 9.2702e-04 - acc: 1.0000 - val_loss: 0.0426 - val_acc: 1.0000

Epoch 25/40

90/90 [==============================] - 0s - loss: 8.4643e-04 - acc: 1.0000 - val_loss: 0.0412 - val_acc: 1.0000

Epoch 26/40

90/90 [==============================] - 0s - loss: 7.8929e-04 - acc: 1.0000 - val_loss: 0.0378 - val_acc: 1.0000

Epoch 27/40

90/90 [==============================] - 0s - loss: 7.1640e-04 - acc: 1.0000 - val_loss: 0.0397 - val_acc: 1.0000

Epoch 28/40

90/90 [==============================] - 0s - loss: 6.5236e-04 - acc: 1.0000 - val_loss: 0.0443 - val_acc: 1.0000

Epoch 29/40

90/90 [==============================] - 0s - loss: 6.2590e-04 - acc: 1.0000 - val_loss: 0.0465 - val_acc: 1.0000

Epoch 30/40

90/90 [==============================] - 0s - loss: 5.6936e-04 - acc: 1.0000 - val_loss: 0.0383 - val_acc: 1.0000

Epoch 31/40

90/90 [==============================] - 0s - loss: 5.2576e-04 - acc: 1.0000 - val_loss: 0.0343 - val_acc: 1.0000

Epoch 32/40

90/90 [==============================] - 0s - loss: 4.9576e-04 - acc: 1.0000 - val_loss: 0.0327 - val_acc: 1.0000

Epoch 33/40

90/90 [==============================] - 0s - loss: 4.6248e-04 - acc: 1.0000 - val_loss: 0.0337 - val_acc: 1.0000

Epoch 34/40

90/90 [==============================] - 0s - loss: 4.3219e-04 - acc: 1.0000 - val_loss: 0.0352 - val_acc: 1.0000

Epoch 35/40

90/90 [==============================] - 0s - loss: 4.0702e-04 - acc: 1.0000 - val_loss: 0.0352 - val_acc: 1.0000

Epoch 36/40

90/90 [==============================] - 0s - loss: 3.8466e-04 - acc: 1.0000 - val_loss: 0.0332 - val_acc: 1.0000

Epoch 37/40

90/90 [==============================] - 0s - loss: 3.5988e-04 - acc: 1.0000 - val_loss: 0.0334 - val_acc: 1.0000

Epoch 38/40

90/90 [==============================] - 0s - loss: 3.4036e-04 - acc: 1.0000 - val_loss: 0.0332 - val_acc: 1.0000

Epoch 39/40

90/90 [==============================] - 0s - loss: 3.2073e-04 - acc: 1.0000 - val_loss: 0.0318 - val_acc: 1.0000

Epoch 40/40

90/90 [==============================] - 0s - loss: 3.0824e-04 - acc: 1.0000 - val_loss: 0.0332 - val_acc: 1.0000

score = model2.evaluate(input_test, output_test, verbose=0)

score

[0.03319043293595314, 1.0]